Data Science Foundations

Session 7: Boosting

Instructor: Wesley Beckner

Contact: wesleybeckner@gmail.com

In this session, we continue with supervised learning by looking at an ensemble learning method called boosting.

7.1 Preparing Environment and Importing Data

7.1.1 Import Packages

from sklearn import svm

from sklearn.datasets import make_blobs, make_circles

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import AdaBoostClassifier, GradientBoostingClassifier

import matplotlib.pyplot as plt

import numpy as np

import plotly.express as px

from ipywidgets import interact, FloatSlider, interactive

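def plot_boundaries(X, clf, ax=None):
    # Note: the scatter colors use `y` from the notebook's global scope,
    # so `y` must be defined before this function is called.
    plot_step = 0.02
    x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
    y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
    # Build a grid that covers the feature space
    xx, yy = np.meshgrid(np.arange(x_min, x_max, plot_step),
                         np.arange(y_min, y_max, plot_step))
    # Predict a class for every grid point, then reshape back to the grid
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    if ax is not None:
        # Draw on the supplied axes and return them
        cs = ax.contourf(xx, yy, Z, cmap='viridis', alpha=0.2)
        ax.scatter(X[:,0], X[:,1], c=y, cmap='viridis', edgecolor='grey', alpha=0.9)
        return ax
    else:
        # Draw on the current matplotlib figure
        cs = plt.contourf(xx, yy, Z, cmap='viridis', alpha=0.2)
        plt.scatter(X[:,0], X[:,1], c=y, cmap='viridis', edgecolor='grey', alpha=0.9)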

7.1.2 Load Dataset

For this session, we will use dummy datasets from sklearn.
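As a quick orientation, the sketch below generates the three synthetic datasets used later in this session and prints their shapes and class labels. The `datasets` dictionary and the loop variable names are illustrative only; the generator arguments mirror the calls that appear further down.

```python
import numpy as np
from sklearn.datasets import make_blobs, make_circles, make_moons

# Each generator returns a feature matrix X and a label vector y.
datasets = {
    "circles": make_circles(random_state=42, noise=0.01),
    "blobs": make_blobs(random_state=42, centers=2, cluster_std=2.5),
    "moons": make_moons(random_state=42, noise=0.05),
}

for name, (X_d, y_d) in datasets.items():
    # Two features per sample, two classes per dataset
    print(name, X_d.shape, np.unique(y_d))
```

All three are two-dimensional, two-class problems, which is what makes it possible to plot decision boundaries directly.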

7.2 Boosting

The last supervised learning algorithms we will cover are the boosting learners. Like Bagging, Boosting algorithms train on variations of the available data, only this time they do so in series rather than in parallel.

What do I mean by this?

It's a little nuanced, but the idea is straightforward. The first model trains on the dataset and makes some errors. The datapoints that contribute the most error are emphasized in the second round of training, and so on: as the sequence of models proceeds, persistently troublesome datapoints receive ever-increasing influence.
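The reweighting idea can be sketched by hand. The loop below is a minimal illustration of discrete AdaBoost for two classes, assuming labels recoded to -1/+1 and decision stumps as weak learners; the variable names (`X_demo`, `weights`, `alphas`, and so on) are made up for this example, and scikit-learn's own implementation differs in its details.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.tree import DecisionTreeClassifier

X_demo, y_demo = make_moons(n_samples=200, noise=0.2, random_state=42)
y_signed = np.where(y_demo == 1, 1, -1)  # recode labels to {-1, +1}

n_rounds = 10
weights = np.full(len(X_demo), 1 / len(X_demo))  # start with uniform weights
stumps, alphas = [], []

for _ in range(n_rounds):
    # Fit a stump that pays more attention to heavily weighted points
    stump = DecisionTreeClassifier(max_depth=1)
    stump.fit(X_demo, y_signed, sample_weight=weights)
    pred = stump.predict(X_demo)

    # Weighted error of this stump (clipped to avoid division by zero)
    err = np.clip(weights[pred != y_signed].sum(), 1e-10, 1 - 1e-10)
    alpha = 0.5 * np.log((1 - err) / err)  # this stump's vote in the ensemble

    # Increase the weight of misclassified points, decrease the rest
    weights = weights * np.exp(-alpha * y_signed * pred)
    weights /= weights.sum()

    stumps.append(stump)
    alphas.append(alpha)

# Final prediction: sign of the alpha-weighted vote of all stumps
ensemble_score = sum(a * s.predict(X_demo) for a, s in zip(alphas, stumps))
train_accuracy = (np.sign(ensemble_score) == y_signed).mean()
print(f"training accuracy after {n_rounds} rounds: {train_accuracy:.2f}")
print(f"largest sample weight: {weights.max():.4f} (uniform would be {1 / len(X_demo):.4f})")
```

After a few rounds the weights are no longer uniform: points that keep getting misclassified carry more weight than the easy ones.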

7.2.1 AdaBoost

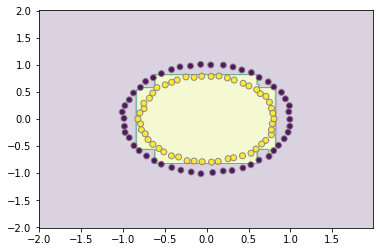

AdaBoost (short for adaptive boosting) was one of the first practical boosting algorithms. Its weak learners (the models that are stitched together in series) are typically stumps, i.e. one-split decision trees, or other very shallow decision trees. Let's create some data and fit an AdaBoostClassifier to it:

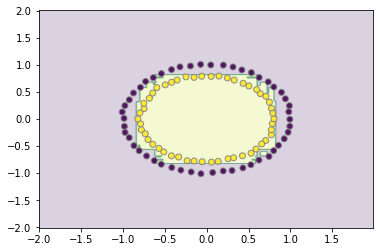

X, y = make_circles(random_state=42, noise=.01)

clf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=3))

clf.fit(X,y)

plot_boundaries(X, clf)

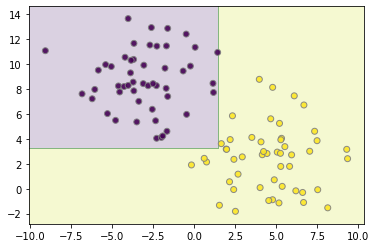

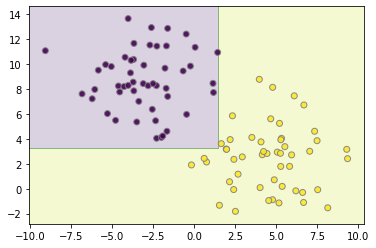
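One way to see the effect of adding weak learners in series is to score the ensemble after each boosting round. The sketch below uses `staged_score`, which yields the accuracy after each successive estimator. The noisier dataset settings, the train/test split and the variable names are assumptions made for this illustration rather than part of the session's own workflow.

```python
from sklearn.datasets import make_circles
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# A noisier version of the circles data so a single stump cannot do well
X_c, y_c = make_circles(n_samples=500, noise=0.1, factor=0.5, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X_c, y_c, random_state=42)

# Stumps (max_depth=1) as weak learners
ada = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1),
                         n_estimators=50, random_state=42)
ada.fit(X_train, y_train)

# Test accuracy after 1, 2, ..., n boosting rounds
staged_test_acc = list(ada.staged_score(X_test, y_test))
print(f"after 1 stump: {staged_test_acc[0]:.2f}")
print(f"after {len(staged_test_acc)} stumps: {staged_test_acc[-1]:.2f}")
```

A single stump can only draw one axis-aligned line, so it does poorly on circular data; the boosted sequence of stumps does much better.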

Trying with a different distribution of data:

X, y = make_blobs(random_state=42, centers=2, cluster_std=2.5)

clf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=5))

clf.fit(X,y)

plot_boundaries(X, clf)

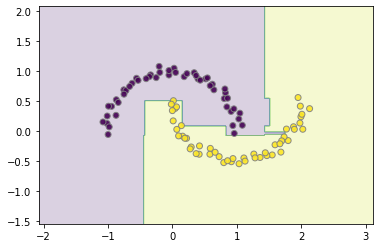

And now with make_moons:

from sklearn.datasets import make_moons

X, y = make_moons(random_state=42, noise=.05)

clf = AdaBoostClassifier()

clf.fit(X,y)

plot_boundaries(X, clf)

7.2.2 Gradient Boosting

Gradient Boosting builds on the idea of AdaBoost. The term gradient refers to the gradient of a loss function taken with respect to the model's current predictions: each new weak learner is fit to the negative gradient, so adding it moves the ensemble in the direction that reduces the loss. Whereas AdaBoost is tied to a predefined loss function (the exponential loss), Gradient Boosting can use any differentiable loss function to coordinate the training of its weak learners.
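To make the role of the gradient concrete, here is a minimal from-scratch sketch for the simplest case, squared-error regression, where the negative gradient of the loss with respect to the current prediction is just the residual. The toy data, `learning_rate`, and variable names are assumptions for illustration; this is not how GradientBoostingClassifier is implemented internally.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(42)
X_reg = np.sort(rng.uniform(-3, 3, size=(200, 1)), axis=0)
y_reg = np.sin(X_reg[:, 0]) + rng.normal(scale=0.1, size=200)

learning_rate = 0.1
n_rounds = 50

# Start from a constant prediction (the mean of the targets)
prediction = np.full_like(y_reg, y_reg.mean())
mse_history = [np.mean((y_reg - prediction) ** 2)]

for _ in range(n_rounds):
    # For the loss 0.5 * (y - F)^2, the negative gradient w.r.t. F is y - F
    residuals = y_reg - prediction
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X_reg, residuals)
    # Take a small step in the direction suggested by the new tree
    prediction += learning_rate * tree.predict(X_reg)
    mse_history.append(np.mean((y_reg - prediction) ** 2))

print(f"training MSE before boosting: {mse_history[0]:.3f}")
print(f"training MSE after {n_rounds} rounds: {mse_history[-1]:.3f}")
```

Swapping in a different differentiable loss only changes how `residuals` is computed; the rest of the loop stays the same.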

X, y = make_circles(random_state=42, noise=.01)

clf = GradientBoostingClassifier(loss='log_loss')  # called 'deviance' in scikit-learn versions before 1.1

clf.fit(X,y)

plot_boundaries(X, clf)
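The `loss` argument is where the choice of loss function enters. As a hedged sketch, the snippet below compares the default loss with `loss="exponential"`, which the scikit-learn documentation describes as recovering AdaBoost and which supports binary classification only. The noisier dataset, the train/test split and the variable names are assumptions for this example.

```python
from sklearn.datasets import make_circles
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X_c, y_c = make_circles(n_samples=500, noise=0.1, factor=0.5, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X_c, y_c, random_state=42)

# Default loss (logistic loss); left unspecified here because its name
# has changed across scikit-learn versions
gb_default = GradientBoostingClassifier(random_state=42).fit(X_train, y_train)

# Exponential loss, the loss associated with AdaBoost
gb_exp = GradientBoostingClassifier(loss="exponential", random_state=42).fit(X_train, y_train)

scores = {
    "default": gb_default.score(X_test, y_test),
    "exponential": gb_exp.score(X_test, y_test),
}
print(scores)
```

On a simple dataset like this, the two losses are expected to perform similarly; the differences tend to matter more on noisy or mislabeled data.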

X, y = make_blobs(random_state=42, centers=2, cluster_std=2.5)

clf = GradientBoostingClassifier()

clf.fit(X,y)

plot_boundaries(X, clf)

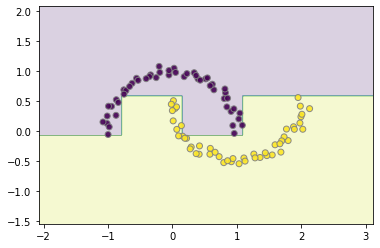

from sklearn.datasets import make_moons

X, y = make_moons(random_state=42, noise=.05)

clf = GradientBoostingClassifier()

clf.fit(X,y)

plot_boundaries(X, clf)